Academic publishers worldwide are one of the three components of today’s research ecosystem, alongside funders and researchers. Workload pressure on individual researchers has been growing for many years, including on the publishing side. Growing competition and the allure of easier comparison between funding applications have made funders lean towards numerical metrics for evaluating researchers. It is a lot more comfortable to decide whether a researcher is worth the funding in question with a ‘universal’ numerical measure at hand.

Although it is understandable that funding committees were, and still are, looking for ways to compare researchers easily, relying on the Impact Factor (IF) or H-index of an applicant’s publications is not as fair as initially thought, especially across different fields of research. Much has been written about this, and publication metrics remain controversial when it comes to research evaluation.

The consequences of this practice have been with us for a while, and they are increasing the strain under which researchers function as part of the ecosystem.

In this case, the chicken came before the egg: researchers behave in the way that the incentive system they are part of rewards. More papers mean (statistically) more citations, potentially leading to more funding.

And some publishers harness this system for their own benefit. After all, non-profit or not, at the end of the day the numbers matter to their survival. A recent study by Mark A. Hanson et al. shows that some tap into the power of special issues - an invited form of publication with a short time to publication, going deep into a particular topic or emerging research area.

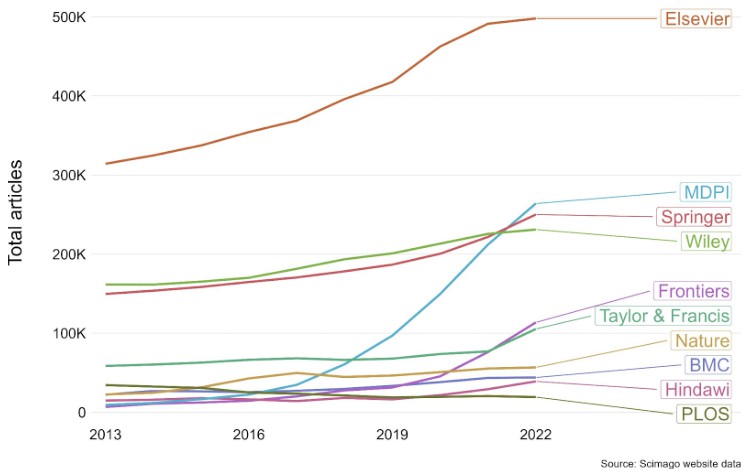

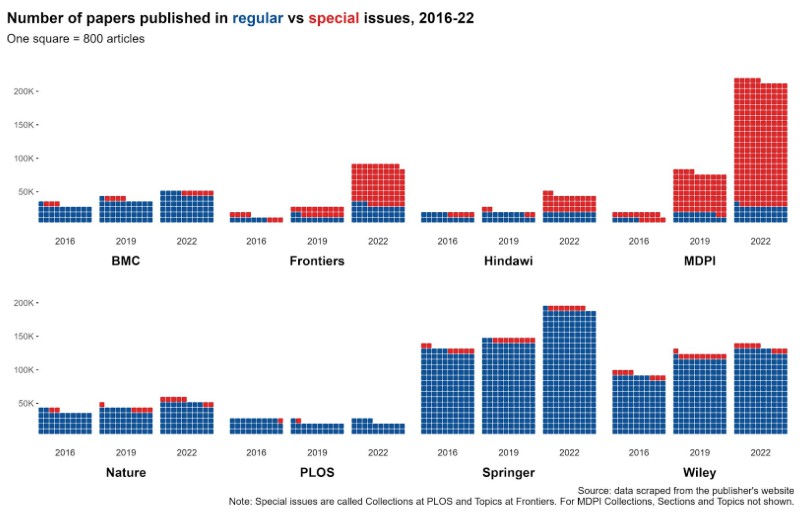

Looking at recent statistics on the number of articles published by major publishers, one cannot miss the exceptional growth in papers published by MDPI and Frontiers. Another metric, the split between regular articles and special-issue articles, gives a good indication of what lies behind the steep increase in MDPI’s total output.

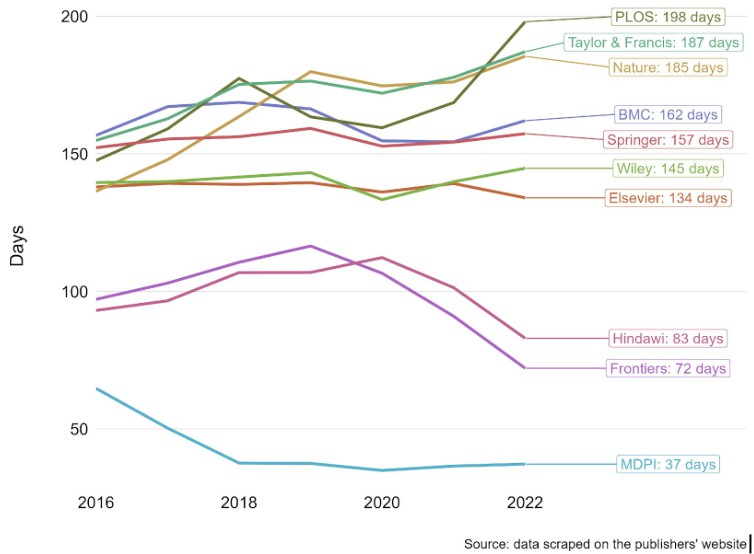

Another component of the rapid increase in total papers published is the time to publication (including revisions). The turnaround-time graph from the same study shows a lightning-fast turnaround at MDPI.

Given these figures, it is no wonder that some MDPI journals were removed from the Clarivate list and won’t be able to show an impact factor.

While many other publishers play the numbers game one way or another, some get penalized when they find a way to outperform the others. Publishing for its own sake (or for profit), or in the case of publishers purely to grow their numbers, is self-serving and deserves criticism, but it is hard not to see how untenable the whole IF and other numbers game is.

Universities are increasingly adopting narrative evaluation of researchers, but the momentum of the numbers game is still strong. Criticizing MDPI for being too numbers-oriented is therefore fair, but hopefully others will take this as a reminder to examine their own activities and motivations. The IF is a measure that brings more harm than benefit and encourages exactly the behavior MDPI shows. Sure, other publishers may keep their publishing practices in check, but for how long? My suggestion would be: stop the IF and other numbers game altogether. What do you think?

Images are courtesy of Mark A. Hanson et al., from the paper:

https://arxiv.org/abs/2309.15884

#GradSchool #ECRchat, #research, #AcademicLife, #PeerReview, #AmReading, #PhDchat, #MakePublishingGreatAgain #Frelsi #publishtoflourish #ScholarsShare #PublishOrPublish, #Professor, #AcWri
